Duke study shows potential for a new, accessible way to diagnose the neurological disease

DURHAM, N.C. – A form of artificial intelligence designed to interpret a combination of retinal images successfully identified a group of patients who were known to have Alzheimer’s disease, suggesting the approach could one day be used as a predictive tool, according to an interdisciplinary study from Duke University.

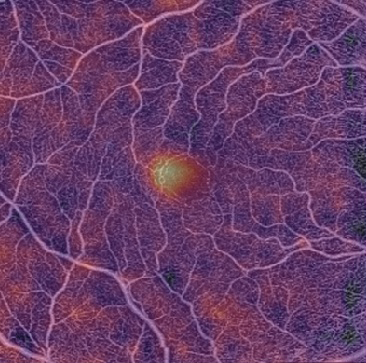

The new software analyzes retinal structure and blood vessels, features that have been correlated with cognitive changes, in images of the inside of the eye.

The findings, published November 26, 2020, in the British Journal of Ophthalmology, provide proof of concept that machine learning analysis of certain types of retinal images could offer a non-invasive way to detect Alzheimer’s disease in symptomatic individuals.

“Diagnosing Alzheimer’s disease often relies on symptoms and cognitive testing,” said senior author Sharon Fekrat, M.D., retina specialist at the Duke Eye Center. “Additional tests to confirm the diagnosis are invasive, expensive, and carry some risk. Having a more accessible method to identify Alzheimer’s could help patients in many ways, including improving diagnostic precision, allowing entry into clinical trials earlier in the disease course, and planning for necessary lifestyle adjustments.”

Fekrat is part of an interdisciplinary team at Duke that also draws on expertise from Duke’s departments of Neurology, Electrical and Computer Engineering, and Biostatistics and Bioinformatics. The team built on earlier work in which they identified changes in retinal blood vessel density that correlated with changes in cognition. Specifically, they found decreased density of the capillary network around the center of the macula in patients with Alzheimer’s disease.

Building on that knowledge, they trained a type of machine learning model known as a convolutional neural network (CNN), using four types of retinal scans as inputs, to discern relevant differences among images.

Scans from 159 study participants were used to build the CNN: 123 were cognitively healthy, and 36 were known to have Alzheimer’s disease.

“We tested several different approaches, but our best-performing model combined retinal images with clinical patient data,” said lead author C. Ellis Wisely, M.D., a comprehensive ophthalmologist at Duke. “Our CNN differentiated patients with symptomatic Alzheimer’s disease from cognitively healthy participants in an independent test group.”

Wisely said it will be important to enroll a more diverse group of patients to build models that can predict Alzheimer’s in all racial groups as well as in those who have conditions such as glaucoma and diabetes, which can also alter retinal and vascular structures.

“We believe additional training using images from a larger, more diverse population with known confounders will improve the model’s performance,” added co-author Dilraj S. Grewal, M.D., Duke retinal specialist.

He said additional studies will also be needed to determine how well the AI approach compares with current methods of diagnosing Alzheimer’s disease, which often include expensive and invasive neuroimaging and cerebrospinal fluid tests.

“Links between Alzheimer’s disease and retinal changes -- coupled with non-invasive, cost-effective, and widely available retinal imaging platforms -- position multimodal retinal image analysis combined with artificial intelligence as an attractive additional tool, or potentially even an alternative, for predicting the diagnosis of Alzheimer’s,” Fekrat said.

In addition to Fekrat, Wisely and Grewal, study co-authors include Dong Wang, Ricardo Henao, Atalie C. Thompson, Cason B. Robbins, Stephen P. Yoon, Srinath Soundararajan, Bryce W. Polascik, James R. Burke, Andy Liu and Lawrence Carin.

The research received support from the Alzheimer’s Drug Discovery Foundation. The authors report no relevant financial interests.

This post originally appeared in Duke Health News.